A Comparison of Serverless Function (FaaS) Providers

New Function-as-a-Service (FaaS) providers are emerging and gaining adoption. How do these new providers differentiate themselves from the existing big players? We will try to answer this question by exploring the capabilities of both the more popular FaaS offerings and the newcomers. We will look at where their focus lies, their limitations, the programming languages they support, and their pricing models. The goal is to build a reference for those who need to choose between providers. Since this should be a collaborative effort, feel free to contact us if you would like to see something added.

Before we dive in, let’s briefly discuss the advantages of FaaS over other solutions. Why would you choose to go serverless?

How does FaaS compare to PaaS and CaaS

Functions as a Service (FaaS) vs. Platform as a Service (PaaS)

At the highest level, the choice between PaaS and FaaS is a choice between control and ease of use, and a choice between architectures (monolith versus microservices). A platform such as Heroku is the first choice for many proofs of concept because getting started is easy and its tooling makes a developer’s life much simpler. Typically, such applications are monoliths that scale by adding nodes that each run another instance of the whole application (in Heroku terms: add more dynos). An application can go a long way on such a platform without running into performance problems. Still, if one part of your code becomes a bottleneck, the monolithic approach makes it harder to scale, because you have to replicate the entire application to scale that one part. Once the load on your application increases, PaaS providers typically become expensive (1).

FaaS, on the other hand, requires you to break up applications into functions, resulting in a microservices architecture. In a FaaS-based application, scaling happens at the function level, and new function instances are spawned as they are needed. This finer granularity avoids bottlenecks, but it also has a downside: many small components (functions) require orchestration to make them cooperate. Orchestration is the extra configuration work needed to define how functions discover and talk to each other.

| | Advantages | Disadvantages |
|---|---|---|
| Platforms (PaaS) | | |
| Functions (FaaS) | | |

Containers (CaaS) vs Functions (FaaS)

Although containers and functions solve very different problems, they are often compared because the applications built with them can be very similar. Both provide ways to easily deploy a piece of code. With containers, one typically still has to decide how many instances need to run, while functions are 100% auto-scaling. There will always be at least one container running for a specific service, waiting until it receives a task, while a function only runs on request. Containers start up fairly quickly, but not fast enough to keep up with sudden bursts in traffic. In contrast, functions simply scale along with the burst.

In general, containers require much more configuration, especially because scaling rules must be tuned to avoid over- or underprovisioning. In FaaS, over- and underprovisioning become the provider’s concern, while clients get a pay-as-you-go model. The pay-as-you-go model is quickly becoming the definition of "serverless" since it abstracts away the last trace of servers from the developer. Of course, the servers that execute your functions are still there, and the provider needs to make sure that there are enough of them when a client launches new functions. To keep this manageable, there are typically memory, payload, and execution time limits in place. These limits make it easier for FaaS providers to estimate the load at a given time and are the price we pay for running code without caring about servers.
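To make the pay-as-you-go trade-off concrete, here is a rough break-even sketch. It uses the AWS Lambda list prices from the pricing table later in this article ($0.0000002 per request, $0.00001667 per GB-second), ignores free tiers, and assumes a hypothetical always-on container costing $15 per month; that container price is an illustrative assumption, not a quote from any provider.

```python
# Rough break-even sketch: a pay-per-use function vs. an always-on container.
# Per-request and per-GB-second prices are the AWS Lambda figures from the
# pricing table; CONTAINER_MONTHLY_USD is a hypothetical assumption.
PRICE_PER_REQUEST_USD = 0.0000002
PRICE_PER_GB_SECOND_USD = 0.00001667
CONTAINER_MONTHLY_USD = 15.0  # hypothetical cost of one always-on container


def function_cost(requests: int, avg_duration_s: float, memory_mb: int) -> float:
    """Monthly function cost: a fee per request plus a fee per GB-second used."""
    gb_seconds = requests * avg_duration_s * (memory_mb / 1024)
    return requests * PRICE_PER_REQUEST_USD + gb_seconds * PRICE_PER_GB_SECOND_USD


def break_even_requests(avg_duration_s: float, memory_mb: int) -> int:
    """Requests per month above which the always-on container becomes cheaper."""
    cost_per_request = function_cost(1, avg_duration_s, memory_mb)
    return int(CONTAINER_MONTHLY_USD / cost_per_request)


print(break_even_requests(avg_duration_s=0.1, memory_mb=128))
```

With a 100 ms average duration at 128 MB, the break-even lands at about 36.7 million requests per month. Below that, and especially with bursty or idle traffic, the function is cheaper; above it, the idle capacity of the container starts to pay off.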

| | Advantages | Disadvantages |
|---|---|---|
| Containers (CaaS) | | |
| Functions (FaaS) | | |

FaaS providers compared

Focus: ease-of-use vs configurability vs edge computing

Of course, people choose a cloud ecosystem for different reasons (e.g., free Azure credits, deployment via CloudFormation, or a specific feature like Google Dataflow), and the logical choice is usually the provider you already use. If the ecosystem is not the deciding factor, the focus of the FaaS provider is often the reason why a company chooses a particular provider.

Generality and configurability:

The biggest providers, such as Azure, Google, and AWS, focus on configurability. Their function offerings are often a vital part of the communication between other services in their ecosystem. The trigger mechanism is therefore separated from the function, allowing the function to be activated by many things such as database triggers, queue triggers, custom events based on logs, scheduled events, or load balancers. If all you need is a REST API based on functions, this might seem cumbersome. For example, when you write an API based on AWS Lambda functions, you need to write the function, deploy it, set up security rules that allow it to be executed, and configure how it is triggered (e.g., via a load balancer). The sheer number of configuration possibilities can make the documentation seem daunting.
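As an illustration of how the trigger lives outside the function, here is a minimal Python handler in the shape AWS Lambda expects for an API Gateway proxy integration. The function knows nothing about HTTP routing; API Gateway, configured separately, turns the incoming request into the `event` dict. The `name` query parameter and the greeting are hypothetical examples.

```python
import json


def lambda_handler(event, context):
    """Entry point invoked by Lambda; `event` is an API Gateway proxy event."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    # The proxy integration expects a status code, headers, and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-written event; no AWS account needed.
    print(lambda_handler({"queryStringParameters": {"name": "FaaS"}}, None))
```

Everything else the paragraph lists (deployment, the IAM permissions that allow invocation, and the API Gateway route that acts as the trigger) is configured outside this code.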

Ease-of-use and developer experience:

Other providers such as Netlify and Vercel (formerly ZEIT) respond to that by focusing on a narrower use case and on ease-of-use. Here, one command is often enough to turn a function into a REST or GraphQL API that is ready to be consumed. The barrier to entry and the learning curve are greatly reduced because the functions serve a more specific purpose (APIs). Besides that, they provide an impressive toolchain to easily debug, deploy, and version your functions. For example, both support file-system routing: you simply drop a function into a folder and it becomes accessible as an API with the same path, which also works locally. Since the functions live on the same origin as your frontend code, you do not have to deal with CORS issues.
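The file-system routing convention can be sketched as a simple mapping from a file's location to a URL path. This is an illustrative approximation with a hypothetical `route_for` helper, assuming functions live under an `api/` folder and that `index` files serve their folder's path; it is not Vercel's or Netlify's actual implementation, and the real platforms differ in details such as URL prefixes and dynamic path segments.

```python
from pathlib import PurePosixPath


def route_for(file_path: str) -> str:
    """Hypothetical helper: map a function file to the URL path it is served on."""
    # Drop the extension: api/hello.js -> api/hello
    parts = list(PurePosixPath(file_path).with_suffix("").parts)
    # An index file serves its folder: api/users/index.ts -> /api/users
    if parts[-1] == "index":
        parts = parts[:-1]
    return "/" + "/".join(parts)


for f in ["api/hello.js", "api/users/index.ts"]:
    print(f, "->", route_for(f))
```

Because the path is derived from the folder layout, there is no separate routing configuration to keep in sync, which is a large part of why these platforms feel lighter than a configurable trigger setup.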

Their function offering also integrates perfectly with the rest of their offerings, which are aimed at facilitating JAMstack applications and typical Single Page Applications (SPAs). JAMstack sites are relatively static sites that make heavy use of serverless offerings to populate the smaller dynamic parts.

Vercel Functions: Deploy with the Now ecosystem and you have a scalable API under the /api endpoint that can also be simulated locally. When using Functions inside a Next.js app, you receive the benefits of Webpack and Babel.

Netlify Functions: Deploy with Netlify's CI by just pushing your code to GitHub. By integrating with their Identity product (similar to Auth0), Functions automatically receive user information. Netlify also provides one-click add-ons, such as databases, whose environment variables are injected automatically.

You could say that Netlify and Vercel relate to AWS Lambda, Azure Functions, and Google Functions the way a PaaS like Heroku relates to AWS: they abstract away the complexities of functions to provide a very smooth developer experience with less setup and overhead. Under the hood, both rely on AWS to run their functions. Their free tiers include not only function invocations but also everything you need to deploy a website, such as hosting, CI builds, and CDN bandwidth.

Edge computing

If low latency is essential and data always needs to be real-time, then Cloudflare Workers, EdgeEngine, Lambda@Edge, or Fly.io are probably the best choices. Their focus is edge computing: bringing the functions as close as possible to the end users to reduce latency to an absolute minimum. At the time of writing (November 2019), Cloudflare Workers have 194 points of presence, EdgeEngine sports 45+ locations, and Fly.io is at 16 locations.
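Why proximity matters can be shown with a back-of-the-envelope calculation. Signals in optical fiber travel at roughly 200,000 km/s, so distance alone puts a floor on round-trip time before any processing happens. The distances below are illustrative, and real network routes are longer than straight lines, so actual latencies are higher.

```python
# Lower bound on round-trip time from signal propagation alone.
# Assumption: light in optical fiber covers roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum); real routes are longer.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Best-case round trip for a request that travels distance_km each way."""
    return 2 * distance_km / FIBER_KM_PER_MS


for label, km in [("nearby edge location", 100), ("distant region", 6000)]:
    print(f"{label}: at least {min_rtt_ms(km):.0f} ms")
```

A user 6,000 km away from the only region pays at least 60 ms per round trip, while a user 100 km from an edge location pays about 1 ms. No amount of function optimization recovers that difference, which is the case for running code at the edge.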

Most of them run functions directly on the V8 engine instead of Node.js, which allows for lower latency than their competitors. Because of that, they initially only supported JavaScript, but all have recently added support for WebAssembly, which should (theoretically) allow most languages. Fly.io recently dropped the V8 runtime to allow for more intensive workloads and worked around cold starts by keeping processes running. EdgeEngine and Cloudflare Workers are probably not meant for very CPU-intensive tasks since their runtime limits are expressed in CPU time. A function can exist for a long time, as long as it does not actively use the CPU for longer than the limit allows. For example, an idle function that is waiting for the result of a network call is not spending any CPU time. This makes these providers a great choice for building a 'middleware' backend that spends most of its time forwarding and waiting on requests.
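The difference between CPU time and wall-clock time, which is how Cloudflare Workers and EdgeEngine meter their limits, is easy to observe locally. This Python sketch is only an analogy: it measures a local process with `time.process_time()` and `time.perf_counter()`, not how either provider actually accounts for usage, and `time.sleep` stands in for waiting on a network call.

```python
import time


def measure(work):
    """Return (wall_seconds, cpu_seconds) spent running work()."""
    wall_start, cpu_start = time.perf_counter(), time.process_time()
    work()
    return time.perf_counter() - wall_start, time.process_time() - cpu_start


def waiting_on_network():
    # Stand-in for awaiting an upstream HTTP response: the process is idle.
    time.sleep(0.2)


wall, cpu = measure(waiting_on_network)
print(f"wall: {wall * 1000:.0f} ms, cpu: {cpu * 1000:.1f} ms")
```

The wall-clock time is about 200 ms while the CPU time stays close to zero, which is why a function that mostly waits on other services fits comfortably within a small CPU-time budget.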

Fly.io differentiates itself by focusing on a powerful API and an open-source runtime that allows you to build and test your apps in a local environment. In a recent update, they moved away from the V8 engine in favour of Docker, which removed the typical serverless limits and explains why their pricing is no longer based on the duration of a call. This turns Fly.io into a service that runs Docker images on servers in different cities. The service comes with a global router that connects users to the nearest available instance, and it automatically adds VMs in popular locations as your application's traffic increases. This makes Fly.io a great service for running heavier workloads close to the edge, such as a full-fledged Rails application.
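Conceptually, a global router like the one described here sends each user to the closest instance that can still take the request. The sketch below assumes the router already knows each region's round-trip time from the user and whether that region has spare capacity; the `pick_region` helper, region codes, and numbers are hypothetical, and this is not Fly.io's actual routing algorithm.

```python
from typing import Dict, Optional


def pick_region(rtt_ms: Dict[str, float], has_capacity: Dict[str, bool]) -> Optional[str]:
    """Hypothetical helper: lowest-latency region with spare capacity, or None."""
    candidates = [region for region in rtt_ms if has_capacity.get(region, False)]
    return min(candidates, key=rtt_ms.__getitem__, default=None)


# Made-up measurements for one user: the closest region is full.
rtt = {"ams": 12.0, "fra": 18.0, "iad": 95.0}
capacity = {"ams": False, "fra": True, "iad": True}
print(pick_region(rtt, capacity))  # falls back to the next-closest region
```

Adding VMs in a popular location, as the paragraph describes, amounts to flipping that region back to having capacity so that nearby users stop spilling over to more distant regions.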

It is noteworthy that Vercel provides a unique Edge Caching system which approximates the serverless edge experience. This system caches data from your serverless functions at configurable intervals, which gives users fast data access, although the data is not real-time.
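A time-based cache captures the trade-off described here: callers get a fast response from the cache, at the cost of data that can be up to one interval old. This is a generic in-memory sketch with a hypothetical `TTLCache` class and an injectable clock for testing; it is not Vercel's Edge Cache itself.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Serve a cached result until it is ttl_seconds old, then recompute it."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # fresh enough: skip the function call entirely
        value = compute()  # stale or missing: invoke the (slow) function
        self._entries[key] = (now, value)
        return value
```

The interval is the knob: a longer TTL means fewer function invocations and faster responses, but callers may see older data, which is why this approach approximates the edge experience without being real-time.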

Do it yourself

Finally, there is a whole new set of offerings that are starting to move away from the serverless aspect of FaaS. Many developers have bumped into the limits (payload, memory) of functions and then tried to work around them. The typical solution was to start running Docker containers. Frameworks like OpenFaaS, the Fn Project, Fission, OpenWhisk, and Kubeless aim to let you deploy your own FaaS solution. They try to deliver an experience similar to a true FaaS provider, but it’s important to note that such a FaaS is far from serverless, since you are again responsible for managing clusters and scaling. One can argue that deploying Kubeless on a managed Kubernetes service comes very close, and it would not be surprising if these open-source frameworks motivate other companies to start their own FaaS offerings. In fact, some of them already serve as the basis for a commercial service: OpenWhisk powers IBM's FaaS offering, while a fork of the Fn Project is the technology behind Oracle Functions. Fly.io also deserves a place in this category since they rolled out an open-source runtime. The future will definitely be interesting; we expect to see FaaS offerings for every niche.
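To give a feel for the developer experience, this is roughly what a function looks like with OpenFaaS's classic `python3` template, which (as far as we know) expects a `handle(req)` function in `handler.py` that receives the request body as a string. The JSON-echo logic is a hypothetical example; building, deploying, and scaling it on your own cluster remain your responsibility.

```python
import json


def handle(req):
    """Handle a request; `req` is the raw request body as a string."""
    try:
        payload = json.loads(req) if req else {}
    except json.JSONDecodeError:
        return json.dumps({"error": "expected a JSON body"})
    if not isinstance(payload, dict):
        return json.dumps({"error": "expected a JSON object"})
    # Hypothetical logic: echo back the keys the caller sent, sorted.
    return json.dumps({"received_keys": sorted(payload)})
```

The function itself stays as small as on a hosted provider; the difference lies in everything around it (the gateway, container registry, and Kubernetes cluster) that you now operate yourself.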

Comparing the focus of FaaS providers

| Focus | Providers |
|---|---|
| Do it yourself | OpenFaaS, Fn Project, Fission, OpenWhisk, Kubeless, Fly.io* |
| Configurability | AWS Lambda, Google Functions, Azure Functions, IBM Cloud Functions, Alibaba Functions, Oracle Functions |
| Ease-of-use | Vercel Functions, Netlify Functions, Fly.io* |
| Edge computing | Cloudflare Workers, EdgeEngine, Fly.io, Lambda@Edge, Vercel Functions + Edge Cache* |

A table categorizing all FaaS providers according to their main focus

Providers starred with * have noteworthy features in that domain although it’s not their main focus

Limits

One of the main pitfalls of Functions as a Service is its limits. Some developers are not aware of them when they get started, or they underestimate how easily an application can run into them over time. The many workarounds found online, in which results are temporarily stored elsewhere (such as AWS S3), show how many developers have bumped into these limits. Do-it-yourself solutions are left out of the comparison since they typically allow extensive configuration of the limits. These limits exist for a reason: they keep the load predictable for the provider, which allows for lower latencies and better scaling. Among the non-edge offerings, Azure is the only provider without payload limits, which might explain why it lags a bit behind performance-wise. Programmers have found several creative ways around these limits, and some providers have come up with their own solutions to improve the developer experience.
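The common workaround mentioned above, storing oversized data elsewhere and passing a reference instead, can be sketched as follows. The limits are the synchronous request-payload limits from the table in this section, treated as MiB for simplicity; `prepare_payload` and the `store_externally` callback are hypothetical, the latter standing in for an upload to something like AWS S3.

```python
from typing import Callable

# Synchronous request-payload limits in MB from the limits table (as MiB).
PAYLOAD_LIMIT_MB = {
    "aws_lambda": 6,
    "google_functions": 10,
    "ibm_cloud_functions": 5,
    "vercel": 5,
    "netlify": 6,
}


def prepare_payload(body: bytes, provider: str,
                    store_externally: Callable[[bytes], str]) -> dict:
    """Hypothetical helper: send small bodies inline, offload large ones."""
    limit_bytes = PAYLOAD_LIMIT_MB[provider] * 1024 * 1024
    # Stay strictly below the limit; encoding and headers also count in practice.
    if len(body) < limit_bytes:
        return {"inline": body}
    # Too big for the function: upload it elsewhere and pass only a reference.
    return {"ref": store_externally(body)}
```

The function on the other side then has to fetch the referenced object itself, which adds a round trip and a storage dependency: the cost of working around the limit rather than removing it.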

| Provider | Memory default (MB) | Memory max (MB) | Execution time default | Execution time max | Request payload (MB) | Response payload (MB) |
|---|---|---|---|---|---|---|
| Non-edge offerings | | | | | | |
| Vercel Functions | 1024 | 3008 | 10s-900s² | 10s-900s | 5 | 5 |
| Netlify Functions | 1024 | 1024³ | 10s³ | 10s³ | 6 | 6 |
| IBM Cloud Functions | 256 | 2048 | 1m | 10m | 5 | 5 |
| Google Cloud Functions | 128 | 2048 | NA | 540s | 10 | 10 |
| Oracle Functions⁴ | 128 | 1024 | 30s | 120s | 6 | 6 |
| Azure Functions⁵ | NA | 1536 | 5m | 10m | No limit | No limit |
| AWS Lambda | 128 | 3008 | 3s | 15m | 6¹ | 6¹ |
| Alibaba Functions | ? | ? | ? | 600s | 6¹ | 6¹ |
| Edge offerings | | | | | | |
| Cloudflare Workers | 128 | 128 | No limit (10ms CPU)⁶ | No limit (10ms CPU) | ? | ? |
| EdgeEngine | 128 | 128 | 15s (5ms CPU)⁶ | 15s (5ms CPU) | ? | ? |
| AWS Lambda@Edge | 128 | 128 | 30s | 5s | 50 | 40 KB |
| Fly.io⁷ | NA | NA | No limit | No limit | No limit | No limit |

1: These limits apply to synchronous requests. Asynchronous requests only allow 128 KB.

2: Vercel’s execution time limits depend on the plan and range from 10 seconds to 15 minutes.

3: Netlify allows you to tweak your function limits (probably within AWS limits) on custom plans.

4: Oracle Functions also limits the number of applications (10) and functions (20) per tenancy.

5: Azure Functions do not specify a per-function memory limit, but the host machine’s limit is 1.5 GB.

6: Cloudflare Workers and EdgeEngine limits are measured in CPU time. A function can use up to x ms of CPU time but can run for a long time as long as it is waiting rather than using the CPU. Cloudflare has a limit of 10 ms or 50 ms of CPU time depending on the plan and no limit on function duration, while EdgeEngine allows 5 ms of CPU time with a 15 s function duration limit and offers custom plans via sales.

7: Fly.io moved towards a global container runtime. The memory limits depend on the machine you select to run your container on; besides that, there are no limits.

Pricing

The main four FaaS providers (Azure, AWS, Google, IBM) are very comparable; only Google comes out more expensive, as can be verified using this online calculator. The new kids on the block are slightly pricier since they do things quite differently. They abstract away a lot of work for you by automatically provisioning load balancers or by making deployment much easier with local development tools, debugging tools, versioning, etc. These providers aim to eliminate DevOps completely, so comparing their prices with bare-metal FaaS providers is like comparing Heroku pricing with AWS. Pricing for custom setups is left out since it depends on your own infrastructure.

Free tier

| Provider | Requests | GB-seconds | Hours / month |
|---|---|---|---|
| Non-edge offerings | | | |
| Netlify | 125K * | NA | 100 * |
| Vercel | NA | NA | 20 * |
| IBM Cloud Functions | NA | 400K | NA |
| Oracle Functions | 2M | 400K | NA |
| Alibaba Functions | 1M | 400K | NA |
| Google Functions | 2M | 400K | NA |
| AWS Lambda | 1M | 400K | NA |
| Azure Functions | 1M | 400K | NA |
| Edge offerings | | | |
| Cloudflare Workers | NA | NA | NA |
| EdgeEngine | NA | NA | NA |
| Fly.io | $10/month of service credit | | |
| Lambda@Edge | NA | NA | NA |

*: Both Netlify and Vercel offer a range of plans, starting with a free plan. Paid plans start with a base fee that also covers other services besides functions. Depending on the plan you choose, these numbers might differ, and custom plans are also available.

Production pricing

| Provider | Per request | Per GB-second | Hours / month |
|---|---|---|---|
| Non-edge offerings | | | |
| Netlify | $0.000038 | NA | 1h per 1000 requests |
| Vercel | NA | NA | $0.2 / h |
| IBM Cloud Functions | NA | $0.000017 | NA |
| Oracle Functions | $0.0000002 | $0.00001417 | NA |
| Alibaba Functions | $0.0000002 | $0.00001668 | NA |
| Google Functions | $0.0000004 | $0.0000025 | NA |
| AWS Lambda | $0.0000002 | $0.00001667 | NA |
| Azure Functions | $0.0000002 | $0.000016 | NA |
| Edge offerings | | | |
| Cloudflare Workers | $0.00000052² | NA | NA |
| EdgeEngine | $0.00000061¹ | NA | NA |
| Fly.io | $0.000001015 / sec³ | NA | NA |
| Lambda@Edge | $0.0000006 | $0.00005001 | NA |

1: EdgeEngine starts with a $10/month subscription which includes 15M requests.

2: Cloudflare Workers start with a $5/month subscription that includes 10M requests. Storage for Cloudflare Workers is billed separately.

3: Fly.io pricing depends on the selected instance since their recent update: https://fly.io/docs/pricing/
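As a worked example of how the request and GB-second prices combine with a free tier, the sketch below computes a monthly bill using the AWS Lambda figures from the tables above as defaults. It is a simplification: it ignores how billed duration is rounded, data transfer, and other line items, and the workload numbers are hypothetical.

```python
def monthly_cost(requests: int, avg_duration_s: float, memory_mb: int,
                 price_per_request: float = 0.0000002,
                 price_per_gb_s: float = 0.00001667,
                 free_requests: int = 1_000_000,
                 free_gb_s: float = 400_000) -> float:
    """Monthly bill in USD after subtracting the free tier (AWS Lambda defaults)."""
    # Compute is billed in GB-seconds: memory (in GB) times execution time.
    gb_s = requests * avg_duration_s * memory_mb / 1024
    billable_requests = max(0, requests - free_requests)
    billable_gb_s = max(0.0, gb_s - free_gb_s)
    return billable_requests * price_per_request + billable_gb_s * price_per_gb_s


# Hypothetical workload: 10M requests/month, 200 ms each, 512 MB of memory.
print(f"${monthly_cost(10_000_000, 0.2, 512):.2f}")
```

For that workload, the function uses 1,000,000 GB-seconds, of which 600,000 are billable ($10.00), plus 9 million billable requests ($1.80), for a total of about $11.80. Passing the other providers' numbers from the tables as arguments gives a rough side-by-side comparison.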

Languages

Most engineers prefer to program in a specific language. When moving to FaaS, this is not always possible since not every language is supported by every provider. Additionally, some languages have significantly longer cold starts than others. In theory, any language is possible when deploying your own custom FaaS, since these frameworks are built on top of Docker and meant to be extensible. Of course, that might require a lot of work, and even providers such as IBM Cloud Functions and Oracle Functions, which build upon OpenWhisk and the Fn Project respectively, do not have native support for all languages. The table below gives an overview of supported languages per service. Only languages marked ✔︎ can be considered fully supported.

| A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

1 | OpenFaaS | Fn project | Fission | OpenWhisk | Kubel -ess | AWS Lambda | Google Functions | Azure Functions | IBM Cloud Functions | Alibaba Functions | Oracle Functions | Vercel Functions | Netlify Functions | Cloudflare Workers | EdgeEngine | Fly.io | Lambda@Edge | |

2 | JS | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ |

3 | Go | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✕ | ✔︎ | ✕ | ✔︎ | ✔︎ | ✔︎ | ◆ | ◆ | ✔︎ | ✕ |

4 | Python | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ▲ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✕ | ◆ | ◆ | ✔︎ | ✔︎ |

5 | Ruby | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✕ | ✕ | ✔︎ | ✕ | ✔︎ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

6 | Java | ✔︎ | ◉ | ✔︎ | ✔︎ | ✔︎ | ✔︎ | ✕ | ▲ | ✔︎ | ✔︎ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

7 | C# | ✔︎ | ◉ | ◉ | ✔︎ | ✔︎ | ✔︎ | ✕ | ✔︎ | ✔︎ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

8 | C++ | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ✕ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

9 | Elixir | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ✕ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

10 | Haskell | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ✕ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

11 | Erlang | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ✕ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

12 | Cobol | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ✕ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

13 | Rust | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ✕ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

14 | PHP | ◉ | ◉ | ◉ | ◉ | ◉ | ◼︎ | ✕ | ▲ | ✔︎ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

15 | Swift | ◉ | ◉ | ✔︎ | ✔︎ | ◉ | ◼︎ | ✕ | ▲ | ✔︎ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

16 | Bash | ◉ | ◉ | ✔︎ | ◉ | ◉ | ◼︎ | ✕ | ▲ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

17 | Powersh | ◉ | ◉ | ◉ | ◉ | ◉ | ✔︎ | ✕ | ▲ | ◉ | ✕ | ◉ | ✕ | ✕ | ◆ | ◆ | ✔︎ | ✕ |

◼︎ AWS Lambda custom runtimes in theory allow you to run any language but require extra work. At the time of writing, custom runtimes have been announced, AWS has open-sourced C++ and Rust runtimes, and partners have developed runtimes for Elixir, Erlang, PHP, and Cobol.

▲ Azure has experimental support for these languages, but it is not advised to use them in production.

◉ Projects like OpenFaaS, Fn project, and OpenWhisk can in theory run any Docker container as a function. Therefore, any language is supported, but it might require extra work. For example, OpenFaaS requires you to create OpenFaaS templates, and OpenWhisk requires you to write something called Docker actions.

◆ Supported via WebAssembly: for now, we assume that any language that compiles to WebAssembly can run.

Performance

Performance is often the difference between an engaged customer and a bored one. FaaS performance is measured in function call latency, which is very hard to compare across providers for three reasons:

- Cold starts: When a function starts for the first time, it will respond slower than usual.

- Different idle instance lifetimes: Each provider keeps its functions alive for a different amount of time to mitigate cold starts.

- Language and technology-specific: Cold starts and execution time are very language-specific. The way the language is executed (compiled, interpreted, runtime, etc.) can have a significant impact on the performance. For example, both EdgeEngine and Cloudflare Workers run JavaScript straight on the V8 engine instead of on Node, which apparently decreases cold start latency significantly.
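The interaction between cold starts and idle lifetimes can be made concrete with a toy model. Given when requests arrive and how long a provider keeps an idle instance alive, the hypothetical `count_cold_starts` helper counts how many requests hit a cold start. The single-instance model and the timeout values are assumptions for illustration; real idle lifetimes vary by provider, are not guaranteed, and real platforms run many instances in parallel.

```python
from typing import List


def count_cold_starts(invocation_times_s: List[float], idle_timeout_s: float) -> int:
    """Count cold starts for one instance that is recycled after idle_timeout_s.

    Simplification: a single instance handles every request, and it stays
    warm only if the gap since the previous request is below the timeout.
    """
    cold = 0
    last = None
    for t in sorted(invocation_times_s):
        if last is None or t - last >= idle_timeout_s:
            cold += 1  # no warm instance available: pay the startup cost
        last = t
    return cold


arrivals = [0, 60, 120, 1000, 1010]  # hypothetical request times in seconds
for timeout in (300, 30):
    print(f"idle lifetime {timeout}s: {count_cold_starts(arrivals, timeout)} cold starts")
```

With the same traffic, a 300-second idle lifetime yields 2 cold starts while a 30-second one yields 4, so two providers with identical startup times can still show very different average latencies.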

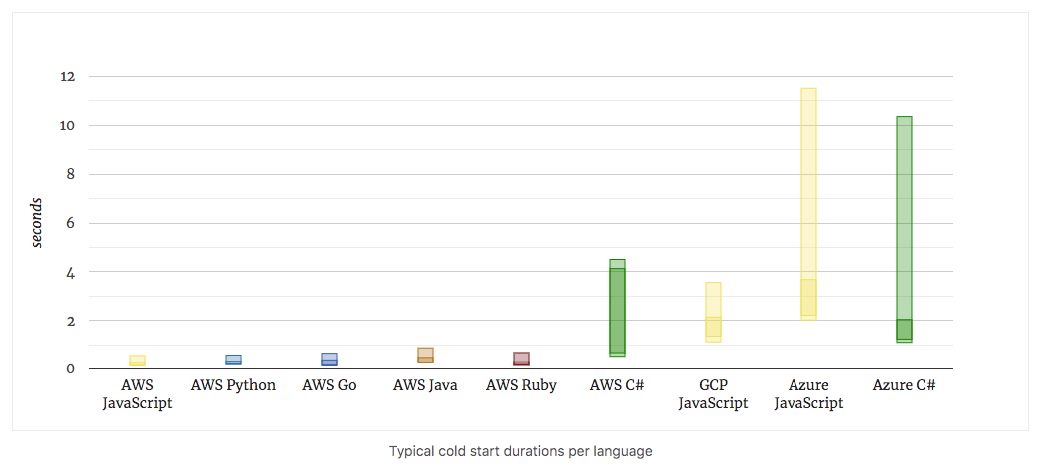

Among the three major providers, AWS is clearly still in the lead (1,2) in keeping cold starts low across all languages. It also exhibits the most consistent performance. Azure comes last, with cold starts and overall performance significantly worse than both Google and AWS.

(source: https://mikhail.io/serverless/coldstarts/big3/)

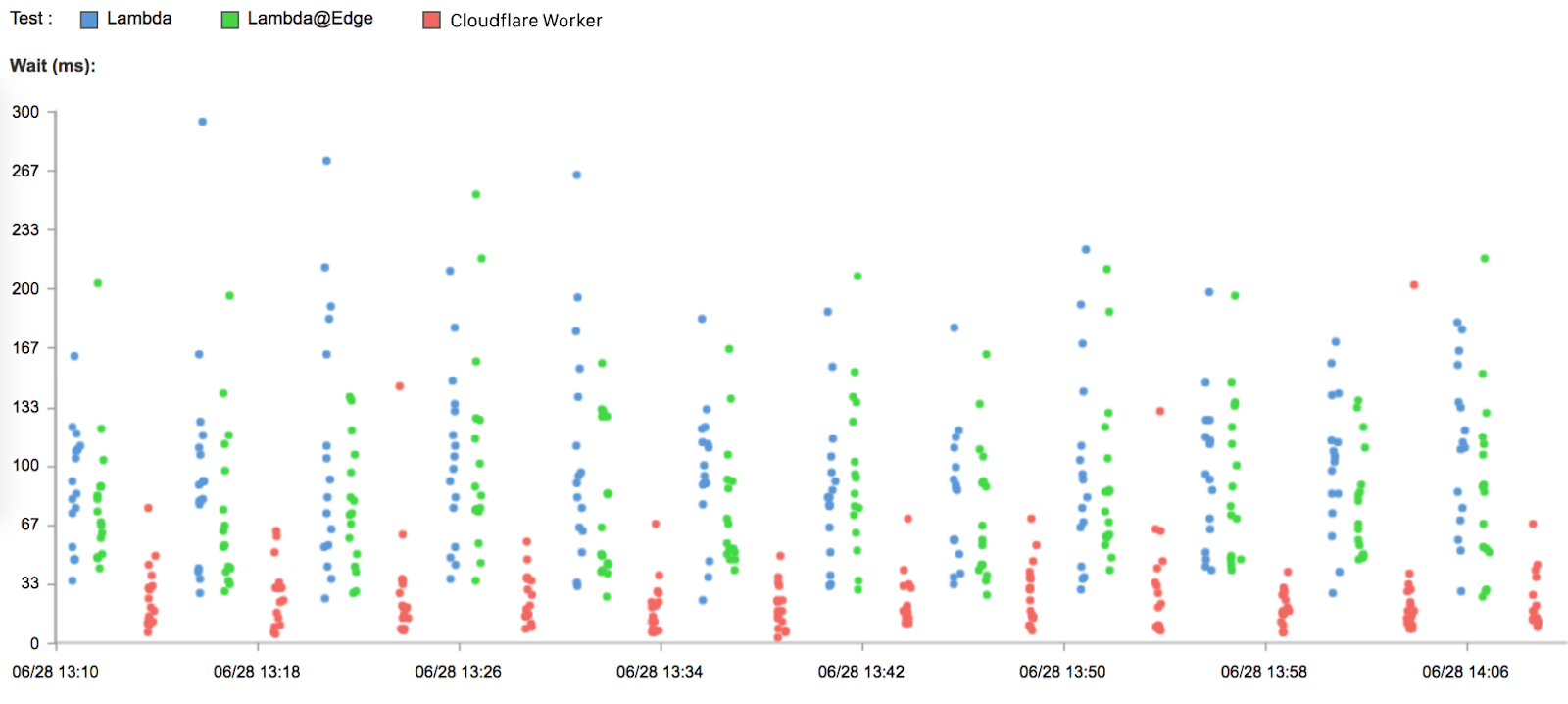

Cloudflare Workers and EdgeEngine both provide lower cold start latency than regular FaaS providers thanks to the way they execute their functions. Together with Lambda@Edge, they also aim to reduce the latency perceived by the caller by deploying functions in multiple locations and executing them as close to the caller as possible. Since Cloudflare Workers currently have the most locations, they can probably provide the lowest average latency of the three edge providers. Cloudflare compared its own Workers with Lambda and Lambda@Edge, and the results indicate that Cloudflare is several times faster.

(Source: https://www.cloudflare.com/learning/serverless/serverless-performance/)

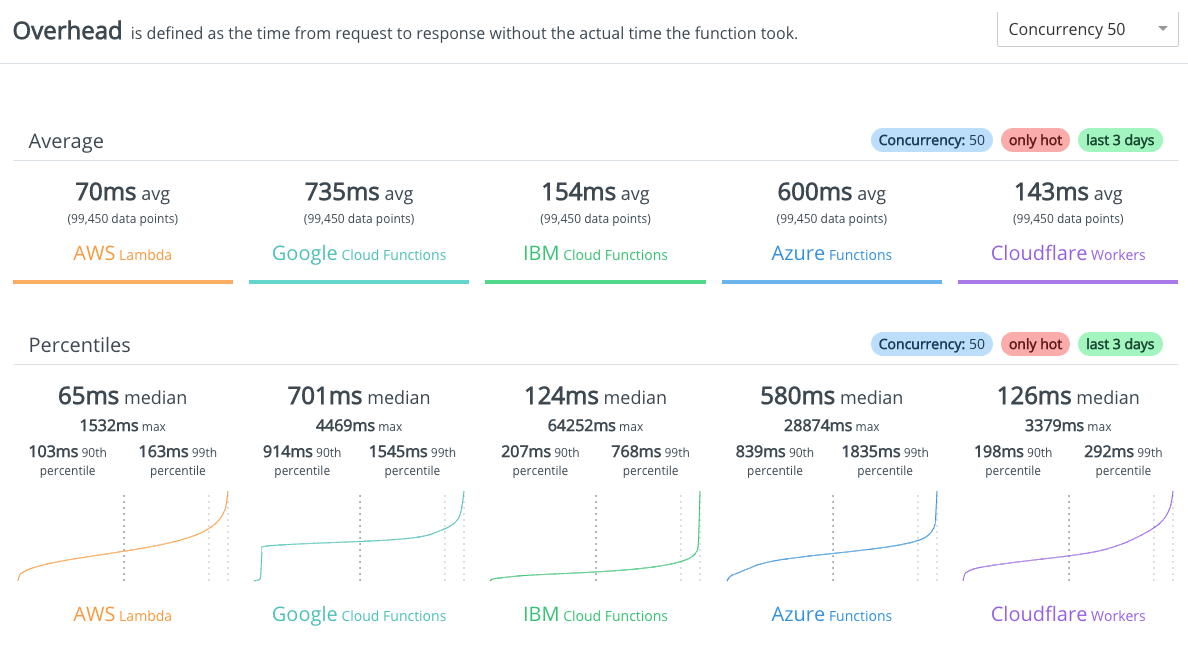

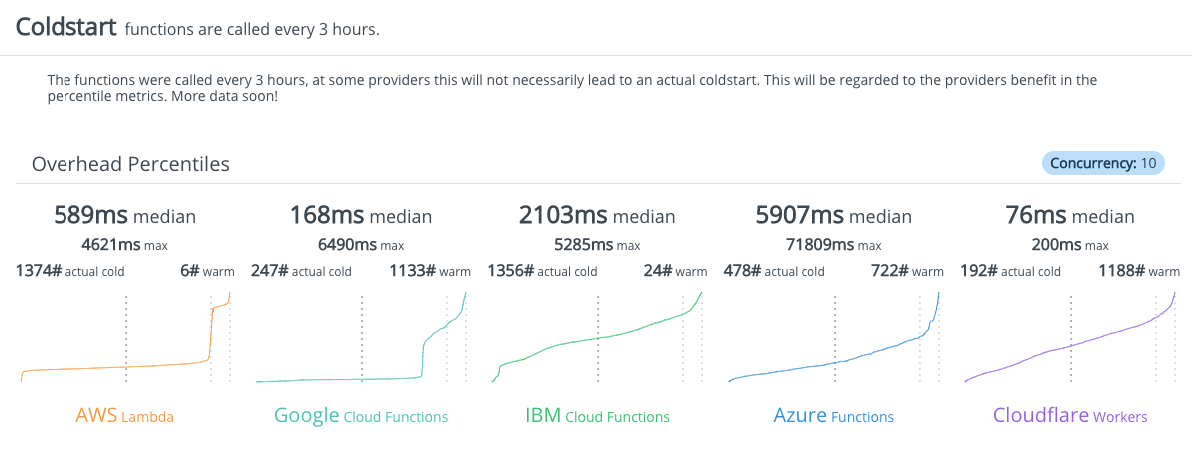

There is also an ongoing independent serverless benchmark project that measures both cold starts and execution time. It aims to extend the benchmarks beyond the main three providers and already covers AWS Lambda, Azure Functions, Google Cloud Functions, IBM Cloud Functions, and Cloudflare Workers. The results are in line with what we expected: AWS Lambda is consistently the fastest for pure processing jobs, yet Cloudflare beats it when it comes to cold starts. IBM Cloud Functions do very well, but also have very high worst-case latencies. Note that the results are continuously updated and the images provided here are snapshots; the results vary strongly from day to day.
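Because worst-case latencies can differ so much from typical ones, benchmark results are usually summarized with percentiles rather than averages. Below is a minimal sketch using the nearest-rank percentile definition (one of several in use; benchmark projects may compute theirs differently), with a hypothetical `latency_summary` helper and made-up sample data.

```python
import math
from typing import Dict, List


def latency_summary(samples_ms: List[float]) -> Dict[str, float]:
    """Median, 99th percentile (nearest-rank method), and worst case."""
    ordered = sorted(samples_ms)

    def nearest_rank(p: float) -> float:
        rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
        return ordered[max(rank, 1) - 1]

    return {"p50": nearest_rank(50), "p99": nearest_rank(99), "max": ordered[-1]}


# Made-up samples: 98 fast calls, one slow call, and one extreme outlier.
samples = [10] * 98 + [50, 900]
print(latency_summary(samples))
```

A single 900 ms outlier leaves the median untouched but completely dominates the maximum, which is how a provider can "do very well" on typical latency while still showing very high worst-case numbers.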

The work of independent benchmarks is a great help to determine which functions are best suited for your problem. Providers like Vercel and Netlify that rely on another provider will probably exhibit the same performance as the underlying technology, which in both of these cases is AWS Lambda. At the time of writing, little work has been done to benchmark the performance of other frameworks.

For do-it-yourself FaaS frameworks, keeping cold start latencies low, scaling, and distribution are your responsibility. Different frameworks provide different configuration options for you to work with, but it will probably be extremely difficult to compete with true serverless providers.

Overview

In a global overview, this is how they all compare.

| | AWS Lambda | Google Functions | Azure Functions | Alibaba Functions | IBM Cloud Functions | Oracle Functions | Vercel Functions | Netlify Functions | Cloudflare Workers | EdgeEngine | Fly.io | Lambda@Edge |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Focus | General | General | General | General | General | General | Easy JAM, Edge | Easy JAM | Edge | Edge | Easy Edge | Edge |
| Speed | +++ | ++ | + | ? | ++ | ? | ? | ? | +++ | ? | ? | ? |
| Free tier | 1M requests, 400K GB-s | 2M requests, 400K GB-s | 1M requests, 400K GB-s | 1M requests, 400K GB-s | Unlimited requests, 400K GB-s | 2M requests, 400K GB-s | 20 hours/month | 100 hours/month | None | None | $25 | None |
| Pricing | $0.0000002 / request, $0.00001667 / GB-s | $0.0000004 / request, $0.0000025 / GB-s | $0.0000002 / request, $0.000016 / GB-s | $0.0000002 / request, $0.00001668 / GB-s | $0.000017 / GB-s | $0.0000002 / request, $0.00001417 / GB-s | $0.2 / h | $0.000038 / request | $0.0000005 / request | $0.0000006 / request | $0.000001015 / sec | $0.0000006 / request, $0.00005001 / GB-s |
| Languages | JS, Go, Python, Ruby, Java, C#, PowerShell | JS, Go, Python | JS, C# | JS, Python, Java, PHP | JS, Go, Python, Ruby, Java, C# | JS, Go, Python, Ruby | JS, Go, Python | JS, Go | JS, WebAssembly | JS, WebAssembly | JS | JS, Python |
| Memory limits (MB) | 128-3008 | 128-2048 | ?-1536 | ? | 128-2048 | 128-1024 | 1024-3008 | 1024-1024 | 128-128 | 128-128 | NA | 128-128 |
| Execution time limit | 15m | 9m | 10m | 10m | 10m | 2m | 15m | 10s | No limit (10ms CPU) | 15s | ? | 5s |
| Request payload | 6 MB | 10 MB | No limit? | 6 MB | 5 MB | 6 MB | 5 MB | 6 MB | ? | ? | ? | 50 MB |
| Response payload | 6 MB | 10 MB | No limit? | 6 MB | 5 MB | 6 MB | 5 MB | 6 MB | ? | ? | ? | 40 KB |

Conclusion

A while ago, choosing a FaaS provider was a relatively easy task. Today, we are spoiled for choice since FaaS providers are springing up like mushrooms. With such a wide range of providers, it becomes harder and harder to keep track of what exists and how they differ. Realizing that some new offerings have an entirely different focus (ease-of-use, edge, do-it-yourself) already brings you one step closer to choosing the right provider. To make your choice easier, we have researched and compared various providers on focus, limitations, pricing, languages, and performance. We hope that this becomes a basis for further discussion, as such a comparison is ideally a collaborative effort.

